NVIDIA

Nvidia owes its surreal rise to AI

Nvidia's GTC conference starts soon, where Jensen Huang, CEO of the American company, will reveal more about how he sees the future of artificial intelligence and his company's role in developing and producing the machine learning solutions that support AI.

Developing and training models like ChatGPT requires enormous computational capacity, a demand Nvidia has met with good sense and good timing through its data center accelerators: the A100, which costs more than ten thousand dollars, and its successor, the H100. There is no doubt that at this year's event the company will raise the bar even further in terms of computing power.

However, last summer, after the sudden collapse of the cryptomining industry, Nvidia very quickly found itself in a rather difficult situation.

At that point, however, the sudden explosion of AI hype changed the picture: the dominant players in the IT industry, including Microsoft and Google's parent company Alphabet, announced that they were investing billions, in some cases tens of billions, of dollars in the development of ChatGPT-type systems, which suddenly put Nvidia in a new position.

The Santa Clara company, which was quick to jump on the AI train, is by some estimates the biggest player in the AI accelerator market today, with a share of over 80%, and AMD is virtually its only significant competitor.

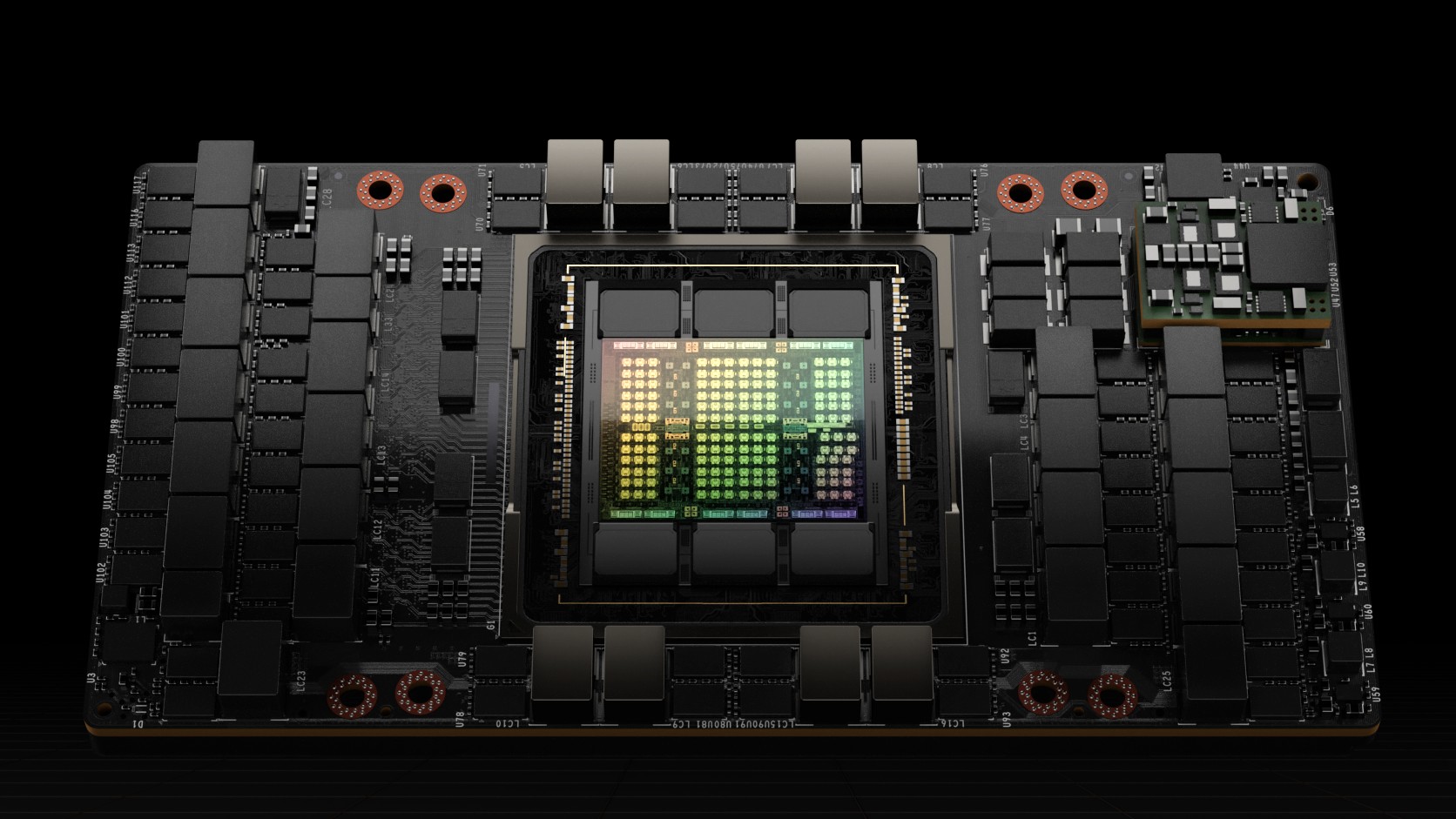

In the meantime, Nvidia has also made available the H100 (Hopper), the successor to the A100 (Ampere), which debuted at last year's GTC. It is designed mainly to significantly accelerate the extremely popular Transformer model architecture, so training can be sped up by as much as six times, while the new generation of Nvidia NVLink allows 256 of these GPUs to be connected, providing nine times more bandwidth than before.

Incidentally, an H100 GPU contains 80 billion transistors, and it is the manufacturer's first accelerator to support PCIe Gen5 and HBM3 (High Bandwidth Memory), so its memory bandwidth reaches 3 TB/s. According to Nvidia, the new chip offers three times the performance of the previous-generation A100 at FP16, FP32 and FP64, and six times the performance in 8-bit floating point operations. However, the system is heavily constrained by memory, because the sixfold increase in FLOPS was accompanied by only a 1.65x increase in memory bandwidth.
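The imbalance between compute and memory can be put into a single number. The short Python sketch below is only an illustration using the two multipliers quoted above (6x for 8-bit floating point throughput, 1.65x for memory bandwidth); the function name `balance_shift` is made up for this example, and the result is a rough rule of thumb, not a benchmark.

```python
def balance_shift(flops_gain: float, bandwidth_gain: float) -> float:
    """Return how much more arithmetic per byte of memory traffic a workload
    needs on the new chip to stay compute-bound instead of memory-bound."""
    if flops_gain <= 0 or bandwidth_gain <= 0:
        raise ValueError("gains must be positive")
    return flops_gain / bandwidth_gain


# Generation-over-generation multipliers quoted in the article (H100 vs. A100).
FLOPS_GAIN = 6.0        # 8-bit floating point throughput
BANDWIDTH_GAIN = 1.65   # memory bandwidth

shift = balance_shift(FLOPS_GAIN, BANDWIDTH_GAIN)
print(f"Arithmetic needed per byte of memory traffic grows by about {shift:.2f}x")
```

In other words, under these figures a workload has to do roughly 3.6 times as many operations on every byte it fetches from memory before the extra compute of the H100 can be fully used.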

In any case, the rapid growth in revenue from AI processors has pushed Nvidia's valuation skyward again this year: the share price is currently up 77% since the start of the year, far outpacing the industry average. The company's market potential is clearly illustrated by the fact that Nvidia is currently worth four times as much as its direct rival, AMD, and more than 5.3 times as much as Intel, which lags far behind in AI.

by: Koi Tamás
